Let's Make Your AI More Reliable

Your Guide to AI Success: Tips for Getting Started.

OpenSesame is a tool that detects AI hallucinations and improves response accuracy for companies using LLMs. Our platform uses context-based information to detect hallucinations in LLM output with precision. By flagging inaccurate and ungrounded text, we provide a reliable way to improve your AI outcomes.

Step 1: Sign Up / Sign In to your account

Step 2: Create a New Project + Vector Database

With your new project set up, you can start populating your dashboard and homepage with hallucination data.

Vector Database: You can also connect your Vector Database directly. This lets OpenSesame pull ground-truth information automatically and detect responses that are not grounded in it.
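To make "ungrounded" concrete, here is a deliberately naive sketch of a grounding check. It uses plain word overlap between each response sentence and the retrieved passages as a stand-in for whatever comparison OpenSesame actually performs, which this guide does not describe. The function name `ungrounded_sentences` and the 0.5 threshold are hypothetical.

```python
import re
from typing import List, Set


def _words(text: str) -> Set[str]:
    """Lowercased alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def ungrounded_sentences(response: str, context: List[str], threshold: float = 0.5) -> List[str]:
    """Return response sentences whose words are mostly absent from the context.

    Naive lexical-overlap stand-in for a grounding check: a sentence counts as
    grounded when at least `threshold` of its words appear in a single
    retrieved passage (the "ground truth" pulled from the vector database).
    """
    context_words = [_words(passage) for passage in context]
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", response.strip()):
        words = _words(sentence)
        if not words:
            continue
        # Best overlap ratio against any one passage; 0.0 if there is no context.
        best = max((len(words & cw) / len(words) for cw in context_words), default=0.0)
        if best < threshold:
            flagged.append(sentence)
    return flagged


context = ["Our refund window is 30 days from the date of purchase."]
response = "The refund window is 30 days from purchase. Shipping is always free."
print(ungrounded_sentences(response, context))  # → ['Shipping is always free.']
```

Word overlap misses paraphrases and negations, so a production check would compare meanings (for example with embeddings or a judge model) rather than vocabulary.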

To connect, simply choose your Vector DB provider, enter your Vector Store Key for secure access, specify the Index Name where your vectors are stored, and select the embedding model used to convert your text data into vectors.
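The four values the connection form asks for can be thought of as one connection record. The sketch below is purely illustrative: `VectorDBConnection`, its field names, and the `VECTOR_STORE_KEY` environment variable are hypothetical and are not part of any OpenSesame SDK. It shows validating that nothing is left blank and masking the key before it is displayed anywhere.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class VectorDBConnection:
    """Hypothetical record of the four values the connection form asks for."""

    provider: str          # your Vector DB provider
    vector_store_key: str  # secret key granting access to the vector store
    index_name: str        # index where your vectors are stored
    embedding_model: str   # model that converted your text into those vectors

    def __post_init__(self) -> None:
        # Reject blank values up front so a bad connection fails early.
        for field_name in ("provider", "vector_store_key", "index_name", "embedding_model"):
            if not getattr(self, field_name).strip():
                raise ValueError(f"{field_name} must not be empty")

    def masked_key(self) -> str:
        """Hide all but the last four characters (all of them for short keys)."""
        key = self.vector_store_key
        if len(key) <= 4:
            return "*" * len(key)
        return "*" * (len(key) - 4) + key[-4:]


connection = VectorDBConnection(
    provider="your-provider",
    vector_store_key=os.environ.get("VECTOR_STORE_KEY", "demo-key-1234"),
    index_name="support-docs",
    embedding_model="text-embedding-3-small",  # example; must match the model that built the index
)
print(connection.masked_key())
```

The embedding model matters because queries must be embedded with the same model that built the index; vectors from different models are not comparable.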

However, don’t worry if you prefer not to connect right away, as connecting to a Vector DB is entirely optional.

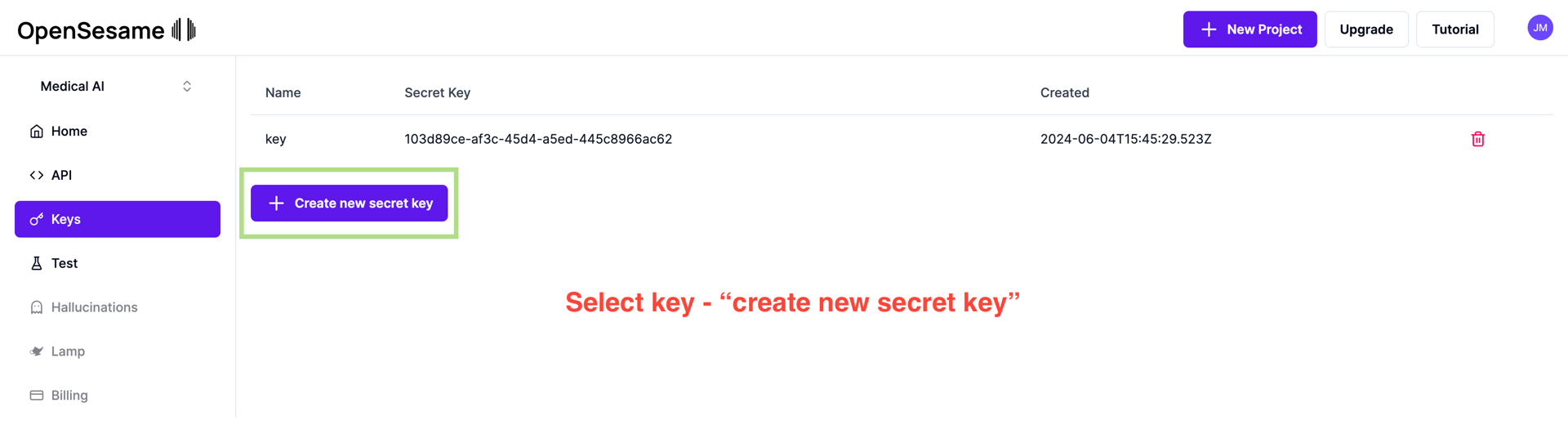

Step 3: Create a Secret Key (Add to Package)

With your secret key, you can add the package to your codebase so we can detect hallucinations in your LLM calls.
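A common practice is to keep the secret key out of source control and read it from the environment at startup. This is a minimal sketch; the variable name `OPENSESAME_SECRET_KEY` and the helper `load_secret_key` are placeholders, not names the OpenSesame package is known to use.

```python
import os


def load_secret_key(env_var: str = "OPENSESAME_SECRET_KEY") -> str:
    """Read the secret key from the environment instead of hard-coding it.

    The default variable name is a placeholder; use whatever name your
    deployment sets.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set {env_var} before starting the app")
    return key
```

Failing loudly at startup when the key is missing is usually easier to debug than a rejected request later.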

Step 4: Install the Package in your codebase

With your secret key and project name, you can install the package into your codebase. All you have to do is change a single line of code so that your model client goes through the "OpenSesame client", and you're good to go!

We then intercept your calls to model providers and start detecting hallucinations.
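A one-line change can work because a wrapper sits between your code and the provider client, forwarding each call and recording what went in and came out. The sketch below shows that general interception (proxy) pattern with a stand-in client. `FakeProviderClient`, `InterceptingClient`, and `complete` are hypothetical names; this guide does not show the real OpenSesame client's API.

```python
from typing import Any, Dict, List


class FakeProviderClient:
    """Stand-in for a model-provider SDK client (hypothetical)."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class InterceptingClient:
    """Forwards every method call to the wrapped client and records it."""

    def __init__(self, client: Any) -> None:
        self._client = client
        self.records: List[Dict[str, Any]] = []

    def __getattr__(self, name: str) -> Any:
        # Only called for attributes not found on the wrapper itself.
        attr = getattr(self._client, name)
        if not callable(attr):
            return attr

        def wrapper(*args: Any, **kwargs: Any) -> Any:
            result = attr(*args, **kwargs)
            # A real tool would send this record off for hallucination checks.
            self.records.append({"method": name, "args": args, "kwargs": kwargs, "result": result})
            return result

        return wrapper


# The "single line" change: wrap the client you already create.
client = InterceptingClient(FakeProviderClient())
print(client.complete("What is our refund window?"))  # → echo: What is our refund window?
print(len(client.records))  # → 1
```

Because the wrapper returns the provider's result unchanged, the rest of your code does not need to know it is there.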

We support OpenAI, Gemini, Anthropic, Groq, Hugging Face, and Cohere, in JavaScript, Python, and cURL.

Step 4 (continued): View Graphs of Your Fragments

As soon as data is collected, we automatically populate your homepage with the outputs, stored as fragments, and plot a graph so you can visualize your hallucinations.
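One way such a graph could be derived is by grouping fragments by day and computing the share flagged as hallucinations. This is a sketch under assumptions: the `Fragment` fields `day` and `hallucinated` are hypothetical, since this guide does not describe what a fragment stores or how OpenSesame's graph is computed.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import Dict, List


@dataclass
class Fragment:
    """Hypothetical minimal fragment: when the call happened and whether it was flagged."""

    day: date
    hallucinated: bool


def daily_hallucination_rate(fragments: List[Fragment]) -> Dict[date, float]:
    """Share of calls flagged as hallucinations on each day, in date order."""
    totals: Dict[date, int] = defaultdict(int)
    flagged: Dict[date, int] = defaultdict(int)
    for fragment in fragments:
        totals[fragment.day] += 1
        if fragment.hallucinated:
            flagged[fragment.day] += 1
    return {day: flagged[day] / totals[day] for day in sorted(totals)}


fragments = [
    Fragment(date(2024, 5, 1), False),
    Fragment(date(2024, 5, 1), True),
    Fragment(date(2024, 5, 2), False),
]
print(daily_hallucination_rate(fragments))
```

Plotting a rate rather than a raw count keeps busy days from looking worse simply because more calls were made.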

Fragments let you test prompts against your corpus of documents. They are important because they visualize the calls made to your LLMs and, if you use a Vector DB, show how each response compares with your RAG model.

When several prompts share the same template, we extract the template and its variables so you can check them.
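To illustrate what "extracting a template and its variables" means, here is a naive sketch, not OpenSesame's method: it only handles prompts with the same number of whitespace-separated words, keeps positions where every prompt agrees as literal text, and turns positions that differ into numbered variables. The function name and the `var0`, `var1`, ... naming are hypothetical.

```python
from typing import Dict, List, Tuple


def extract_template(prompts: List[str]) -> Tuple[str, List[Dict[str, str]]]:
    """Infer a shared template from prompts that differ only in some words."""
    if not prompts:
        raise ValueError("need at least one prompt")
    token_lists = [p.split() for p in prompts]
    length = len(token_lists[0])
    if any(len(tokens) != length for tokens in token_lists):
        raise ValueError("prompts do not share a word-aligned template")

    template_parts: List[str] = []
    slots: List[Tuple[int, str]] = []  # (word position, variable name)
    for i in range(length):
        column = {tokens[i] for tokens in token_lists}
        if len(column) == 1:
            # Every prompt has the same word here: keep it literally.
            template_parts.append(token_lists[0][i])
        else:
            name = f"var{len(slots)}"
            slots.append((i, name))
            template_parts.append("{" + name + "}")

    # One dict of variable values per input prompt.
    variables = [{name: tokens[i] for i, name in slots} for tokens in token_lists]
    return " ".join(template_parts), variables


template, variables = extract_template([
    "Summarize the refund policy for Acme",
    "Summarize the shipping policy for Globex",
])
print(template)   # → Summarize the {var0} policy for {var1}
print(variables)  # → [{'var0': 'refund', 'var1': 'Acme'}, {'var0': 'shipping', 'var1': 'Globex'}]
```

Real prompts rarely align word for word, so a practical extractor would need a sequence alignment (for example a diff) or the original template string from your code.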

Step 5: Hallucination Dashboard

Your hallucination dashboard shows references paired with their ground-truth values and the results of a web search. You can export this data as a CSV file and mark the values we detected to give us feedback!
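Once exported, the CSV can be filtered with standard tools. The sketch below uses Python's `csv.DictReader` on an inline sample; the column names `response`, `ground_truth`, and `flagged` are guesses, so check the header row of your actual export and adjust them.

```python
import csv
import io
from typing import Dict, List

# Hypothetical sample; the real export's columns may be named differently.
SAMPLE_EXPORT = """response,ground_truth,flagged
Refunds take 90 days,Refunds take 30 days,true
Support is open 24/7,Support is open 24/7,false
"""


def flagged_rows(csv_text: str, flag_column: str = "flagged") -> List[Dict[str, str]]:
    """Return rows whose flag column is 'true' (case-insensitive)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row for row in reader if (row.get(flag_column) or "").strip().lower() == "true"]


for row in flagged_rows(SAMPLE_EXPORT):
    print(f"{row['response']!r} vs ground truth {row['ground_truth']!r}")
```

For a file on disk, open it with `newline=""` (as the `csv` module recommends) and pass the result of `f.read()` to `flagged_rows`.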

Click a response to expand it and view the sources and reasoning behind each hallucination data point.

Step 6: Test Playground


Fact-check AI responses by testing the output from your LLMs.

We provide token accuracy, reasoning, and sources so you can check your data.
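This guide does not define "token accuracy". One plausible reading, assumed here and not confirmed by OpenSesame, is the fraction of response tokens judged to be supported by the sources. Given one True/False label per token, that is a simple ratio; the function name `token_accuracy` is hypothetical.

```python
from typing import Sequence


def token_accuracy(supported: Sequence[bool]) -> float:
    """Fraction of response tokens marked as supported by the sources.

    Assumes you already have one True/False label per token; how OpenSesame
    labels tokens is not described in this guide.
    """
    if not supported:
        raise ValueError("no tokens to score")
    return sum(supported) / len(supported)


labels = [True, True, True, False]  # e.g. 3 of 4 tokens supported
print(token_accuracy(labels))  # → 0.75
```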

Ste